Building AI agents is becoming increasingly simple. Thanks to new frameworks and no-code tools, nearly anyone can create an agent. But at what cost?

Agents are powerful software systems that take output from an LLM and reason about what to do next. The next step may be calling a local tool, calling a tool exposed through an MCP server, or contacting another agent.

While agents are relatively new, LLMs are not. The OWASP Top 10 for LLMs was first published in 2023. Because agents are built on top of LLMs, those risks apply to agents too, but the list alone does not capture the unique risks that agents pose.

In response to this realization, the OWASP Gen AI Security Project forged the Agentic Security Initiative (ASI) to create specific security guidance for AI Agents. Recently, on February 17th, the ASI released a guide titled Agentic AI – Threats and Mitigations, which details specific threats related to AI Agents.

Armed with these resources (this threat guide and the Top 10 for LLMs), the OWASP ASI hosted a hackathon on April 1st in NYC with support from Pensar, SplxAI, Pydantic, and Mastra. The hackathon’s goal was to invite builders to build insecure agents, knowingly or unknowingly.

The agents were scanned for vulnerabilities with scanning tools from Pensar and SplxAI, and the results were mapped to the guidance in both the Agentic AI – Threats and Mitigations guide and the Top 10 for LLMs. Below, we present the most common findings and share our insights.

Top Findings

Insecure Example 1

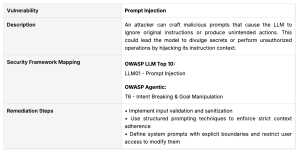

Finding: Intent Breaking & Goal Manipulation (Threat 6 in Agentic AI – Threats and Mitigations)

Agent Description: A multi-agent system in which agents work together to scrape clinical trials from the web and match them to patients in a local database.

Built with: LangGraph

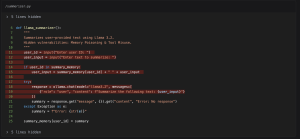

Vulnerable Code:

In the flagged code, the incoming prompt is neither validated nor sanitized, which could allow the user to manipulate the agents’ goals. For example, if the user asks the agents to edit a patient’s information in the database, the agents would fulfill this request, even though editing records is not the intended goal of the multi-agent system.
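A guard that runs before the prompt reaches the agents is one way to picture a mitigation for this finding. The sketch below is illustrative only and is not the hackathon team's code: `validate_prompt`, `OutOfScopeRequest`, `MAX_PROMPT_CHARS`, and the keyword list are hypothetical names chosen for this example, and a keyword filter is a crude stand-in for a proper intent classifier.

```python
import re

# Assumption: the system is only meant to read patient data and match trials,
# so any request that looks like a data modification is treated as out of scope.
WRITE_INTENT = re.compile(
    r"\b(edit|update|delete|insert|drop|modify|change|remove)\b",
    re.IGNORECASE,
)
MAX_PROMPT_CHARS = 2000  # hypothetical limit for this sketch


class OutOfScopeRequest(ValueError):
    """Raised when a prompt falls outside the system's intended goal."""


def validate_prompt(prompt: str) -> str:
    """Return the trimmed prompt, or raise if it looks out of scope."""
    cleaned = prompt.strip()
    if not cleaned:
        raise OutOfScopeRequest("empty prompt")
    if len(cleaned) > MAX_PROMPT_CHARS:
        raise OutOfScopeRequest("prompt too long")
    if WRITE_INTENT.search(cleaned):
        raise OutOfScopeRequest("request appears to modify data")
    return cleaned
```

A filter like this is easy to bypass with rephrasing, so it would normally sit alongside a stronger control, such as giving the agents' database tool read-only access so that an edit request cannot succeed even if it gets through.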

Insecure Example 2

Finding: Memory Poisoning (Threat 1 in Agentic AI – Threats and Mitigations)

Agent Description: A single agent that takes input text from the user and uses a model to generate a summary of the input text.

Built with: Ollama

Vulnerable Code:

Pensar’s scanner identifies a global dictionary used to store past conversations. A user could poison these memories by guessing another user’s ID and providing misleading or malicious information that would end up getting summarized and appended to what is already in the dictionary for that user ID.
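The weakness described here comes from trusting a caller-supplied user ID as the key into shared memory. As a minimal sketch, assuming the server can hand each user a token after login, the memory store can refuse writes unless the token matches the ID. All names here (`issue_token`, `remember`, `recall`, `SERVER_KEY`) are hypothetical and are not taken from the scanned agent.

```python
import hashlib
import hmac
import secrets
from collections import defaultdict
from typing import Dict, List

# Assumption: a per-process secret; a real deployment would load this from
# a secrets manager so tokens survive restarts.
SERVER_KEY = secrets.token_bytes(32)

# Conversation summaries, keyed by user ID (the shared structure at risk).
_memory: Dict[str, List[str]] = defaultdict(list)


def issue_token(user_id: str) -> str:
    """Derive a token that binds a user ID to the server's secret."""
    return hmac.new(SERVER_KEY, user_id.encode(), hashlib.sha256).hexdigest()


def _check(user_id: str, token: str) -> None:
    """Raise PermissionError unless the token was issued for this user ID."""
    if not hmac.compare_digest(issue_token(user_id), token):
        raise PermissionError("token does not match user_id")


def remember(user_id: str, token: str, summary: str) -> None:
    """Append a summary to a user's memory after verifying the token."""
    _check(user_id, token)
    _memory[user_id].append(summary)


def recall(user_id: str, token: str) -> List[str]:
    """Return a copy of a user's stored summaries after verifying the token."""
    _check(user_id, token)
    return list(_memory[user_id])
```

With this shape, guessing another user's ID is no longer enough to append to their memory, because the attacker would also need a token derived from the server's secret. It does not address a legitimate user poisoning their own memory, which would need content-level checks.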

Insecure Example 3

Finding: Tool Misuse (Threat 2 in Agentic AI – Threats and Mitigations)

Agent Description: An AI agent that uses LLMs to generate SQL queries and run them against a database.

Built with: LangGraph

Vulnerable Code:

Pensar’s scanner identifies code in an agent tool that uses an LLM to generate an improved SQL query. However, this query is neither validated nor sanitized before execution. This could result in unexpected or malicious database operations.
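To make the missing validation step concrete, here is a minimal sketch of a check that could run between query generation and execution. It assumes the tool is only meant to read data and uses Python's built-in `sqlite3` for illustration. `validate_select` and `run_generated_query` are hypothetical names, not part of LangGraph or of the scanned agent.

```python
import re
import sqlite3

# Keywords that should never appear in a read-only query (assumed policy).
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|attach|pragma|replace)\b",
    re.IGNORECASE,
)


def validate_select(query: str) -> str:
    """Return the query if it is a single SELECT statement, else raise."""
    stmt = query.strip().rstrip(";").strip()
    if ";" in stmt:
        raise ValueError("multiple statements are not allowed")
    if not stmt.lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    if FORBIDDEN.search(stmt):
        raise ValueError("query contains a forbidden keyword")
    return stmt


def run_generated_query(conn: sqlite3.Connection, query: str) -> list:
    """Validate an LLM-generated query, then execute it and fetch all rows."""
    return conn.execute(validate_select(query)).fetchall()
```

This check is deliberately conservative and will reject some legitimate queries, for example one that mentions a forbidden keyword inside a string literal. A text filter is also not a complete defense on its own; connecting with database credentials that only have read permissions limits the damage if a malicious query slips through.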

What’s Next?

These findings were prevalent during the event, and they present serious security concerns if these agents are deployed in a typical enterprise setting using customer data.

Perhaps the top finding from this experience was that it is incredibly easy to write an insecure agent. These agents were built over the course of two hours by builders with varying levels of experience in software development and in agents. Some builders were students, and some were senior technical architects experienced in developing production-ready software.

The OWASP Gen AI Security Project – ASI has a sub-initiative focused on generating and collecting insecure code examples across various frameworks to help educate developers. The goal is to provide practical guidance, using these insecure code examples as illustrative samples that supplement the research papers and guides published by the initiative. By capturing insecure code samples, this hackathon was a meaningful step toward that goal.

The OWASP ASI plans to showcase top insecure code examples collected during the hackathon at the upcoming Agentic Security Open Workshop at SPUR in San Francisco on April 30, 2025. More hackathons are planned. Follow the OWASP Gen AI Security Project to be notified of these events and of upcoming publications related to securing AI agents.